- SPACY PART OF SPEECH TAGGER HOW TO

- SPACY PART OF SPEECH TAGGER PDF

- SPACY PART OF SPEECH TAGGER INSTALL

- SPACY PART OF SPEECH TAGGER SERIES

The output looks like this: Manchester PROPN compound

From the output, you can see that spaCy is intelligent enough to find the dependency between the tokens. For instance, the sentence contained the word isn't. The dependency parser has broken it down into two tokens and specifies that n't is actually the negation of the previous word. For a detailed understanding of dependency parsing, refer to this article.

In addition to printing the words, you can also print the sentences of a document. We can iterate through each sentence using the following script: for sentence in document.sents: The output of the script looks like this: Hello from Stackabuse.

You can also check whether a sentence starts with a particular token. You can get individual tokens using an index and square brackets, like an array: document[4] In the above script, we are retrieving the 5th token in the document. Keep in mind that indexes start from zero, and the period counts as a token. Now, to see if any sentence in the document starts with The, we can use the is_sent_start attribute as shown below: document[4].is_sent_start In the output, you will see True, since the token The is used at the start of the second sentence.

In this section, we saw a few basic operations of the spaCy library. Let's now dig deeper and see tokenization, stemming, and lemmatization in detail.

Tokenization

As explained earlier, tokenization is the process of breaking a document down into words, punctuation marks, numeric digits, etc. Create a new document using the following script: sentence3 = sp( u'"They\'re leaving U.K.
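To make the sentence and index operations described in this section concrete, here is a minimal sketch. It rests on a few assumptions: spaCy 3.x is installed, a blank English pipeline with the rule-based sentencizer component stands in for the trained model (so no download is needed), and the sample text is hypothetical apart from its first sentence.

```python
import spacy

# Assumption: spaCy 3.x. A blank English pipeline only tokenizes, so the
# rule-based "sentencizer" is added to mark sentence boundaries.
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")

# Hypothetical sample text; only the first sentence comes from the article.
document = nlp("Hello from Stackabuse. The site has Python tutorials.")

# Iterate over the sentences detected in the document.
for sentence in document.sents:
    print(sentence.text)

# Indexes start from zero and the period counts as a token,
# so index 4 is the fifth token.
fifth = document[4]
print(fifth.text, fifth.is_sent_start)
```

With a trained model, sentence boundaries come from the model's own components rather than from the sentencizer, so results on harder text may differ from this sketch.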

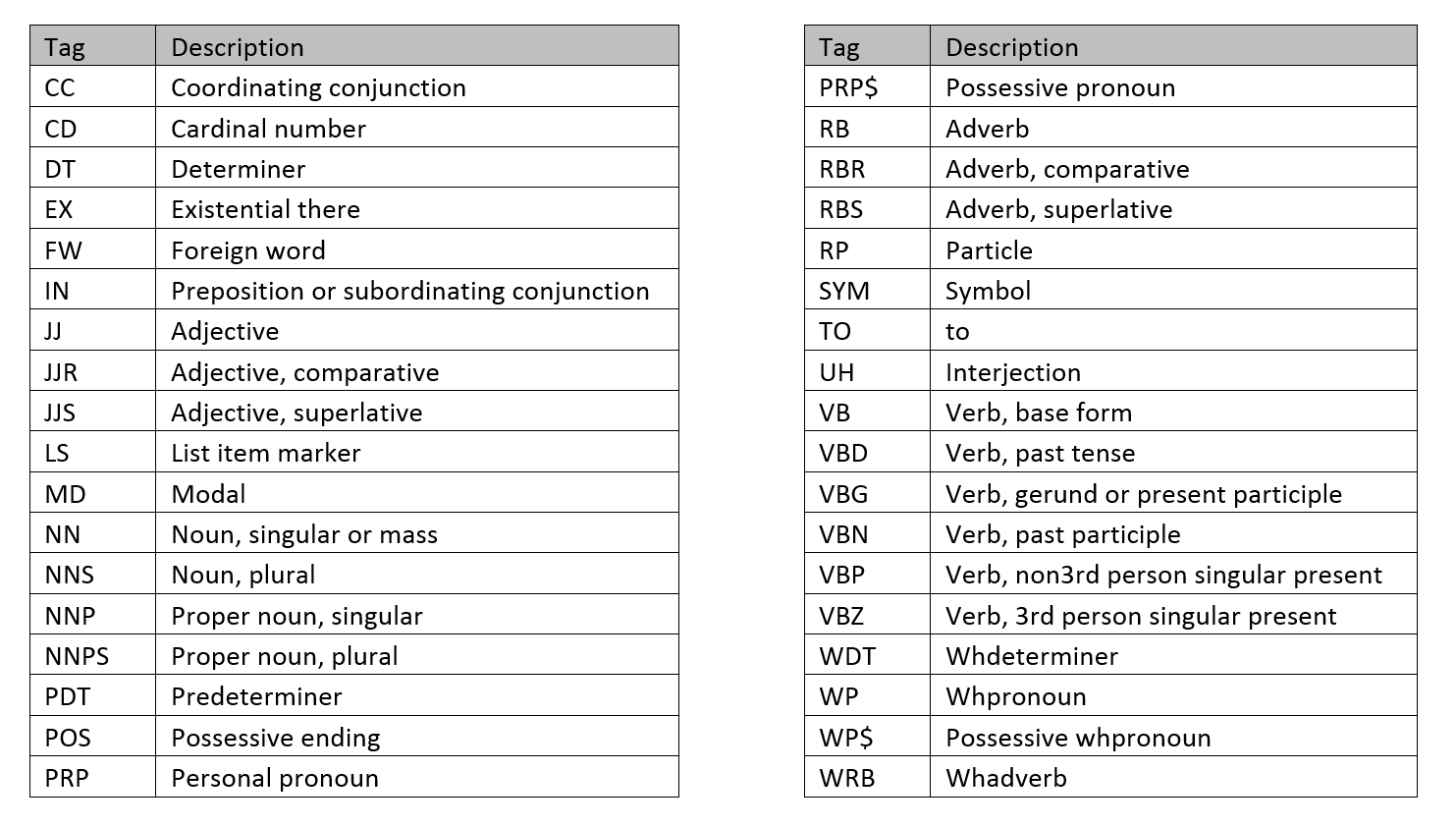

Next, we need to load the spaCy language model. We use the load function from the spacy library to load the core English language model and assign it to sp.

Let's now create a small document using this model. A document can be a sentence or a group of sentences. The following script creates a simple spaCy document. sentence = sp( u'Manchester United is looking to sign a forward for $90 million') SpaCy automatically breaks your document into tokens when a document is created using the model.

A token simply refers to an individual part of a sentence having some semantic value. Let's see what tokens we have in our document by looping over it (for word in sentence:) and printing each word. The output of the script begins like this: Manchester

We can also see the part of speech of each of these tokens using the .pos_ attribute inside the same loop (for word in sentence:). Each word or token in our sentence is assigned a part of speech. For instance, "Manchester" has been tagged as a proper noun, "looking" has been tagged as a verb, and so on.

Finally, in addition to the parts of speech, we can also see the dependencies. Let's create another document: sentence2 = sp( u"Manchester United isn't looking to sign any forward.") For dependency parsing, the attribute dep_ is used inside the loop for word in sentence2:
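The token, part-of-speech, and dependency loops described here can be sketched in a single script. This is an illustration under assumptions, not the article's original code: it assumes spaCy 3.x with the small English model installed as en_core_web_sm, and it falls back to a blank pipeline (tokens only, empty tags) when that model is missing.

```python
import spacy

try:
    # Assumption: the small English model is installed under this name.
    sp = spacy.load("en_core_web_sm")
except OSError:
    # Fallback: tokenizer only, so pos_ and dep_ come back as empty strings.
    sp = spacy.blank("en")

sentence2 = sp("Manchester United isn't looking to sign any forward.")

# One row per token: text, coarse part of speech, dependency label.
rows = [(word.text, word.pos_, word.dep_) for word in sentence2]

for text, pos, dep in rows:
    print(f"{text:12} {pos:6} {dep}")
```

Note how isn't is split into the two tokens is and n't by the tokenizer itself, before any tagging or parsing happens.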

SPACY PART OF SPEECH TAGGER HOW TO

We will be using the English language model. The language model is used to perform a variety of NLP tasks, which we will see in a later section. The following command downloads the language model: $ python -m spacy download en

Before we dive deeper into different spaCy functions, let's briefly see how to work with it. As a first step, you need to import the spacy library as follows: import spacy
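The download and import steps can be tied together in a short sketch. It assumes spaCy 3.x, where full package names such as en_core_web_sm are used in place of the older en shortcut. The helper load_model and the constant MODEL_NAME are hypothetical names introduced for illustration; they are not part of spaCy.

```python
import spacy

# Assumption: spaCy 3.x naming for the small English model.
MODEL_NAME = "en_core_web_sm"


def load_model(name=MODEL_NAME):
    """Load a trained pipeline, or fall back to a blank English one."""
    try:
        return spacy.load(name)
    except OSError:
        # spacy.load raises OSError when the model package is not installed.
        print(f"Model {name!r} not found; try: python -m spacy download {name}")
        return spacy.blank("en")


sp = load_model()

# Show the language code and the components in the loaded pipeline.
print(sp.lang, sp.pipe_names)
```

The fallback keeps the script runnable without the model, but a blank pipeline only tokenizes; tagging and parsing need the trained model.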

SPACY PART OF SPEECH TAGGER INSTALL

We will also touch on NLTK when a task is easier to perform with NLTK than with spaCy.

If you use the pip installer to install your Python libraries, go to the command line and execute the following statement: $ pip install -U spacy Otherwise, if you are using Anaconda, you need to execute the following command on the Anaconda prompt: $ conda install -c conda-forge spacy

Once you download and install spaCy, the next step is to download the language model.

SPACY PART OF SPEECH TAGGER SERIES

NLTK was released back in 2001, while spaCy is relatively new and was developed in 2015. In this series of articles on NLP, we will mostly be dealing with spaCy, owing to its state-of-the-art nature.

In this article, we will start working with the spaCy library to perform a few more basic NLP tasks such as tokenization, stemming, and lemmatization. The spaCy library is one of the most popular NLP libraries, along with NLTK. The basic difference between the two libraries is that NLTK offers a wide variety of algorithms for each problem, whereas spaCy offers only one, chosen as the best algorithm for that problem.

SPACY PART OF SPEECH TAGGER PDF

In the previous article, we started our discussion about how to do natural language processing with Python. We saw how to read and write text and PDF files.
